Wistfully, I look back on some technical achievements of past decades that humanity has long since lost and may never regain. Take, for example, the ability to set how many rings the answering machine (today: voicemail) lets go by before it picks up. Or audio media (or rather, the devices that play them) that remember the playback position across listening breaks of any length. Gone, all gone.

Category Archives: Technology

Unconditionally Make Implicit Prerequisites

I’m pretty new to make, so maybe the following is trivial and/or horribly bad practice, but here goes: I have a bunch of output directories, each containing a file called en.tok from which I want to make a corrected version, en.tok.corr. Apart from en.tok, en.tok.corr also depends on the script that applies the corrections and on a MySQL database that contains the corrections. Since make doesn’t know about databases, I chose to represent the database by an empty file, en.tok.db, and to use touch in a second rule to set its timestamp to that of the latest relevant correction, so that make knows whether to rerun the first rule:

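```make
# Apply the corrections to en.tok ($<, the first prerequisite), writing en.tok.corr ($@).
$(OUT)%/en.tok.corr : $(OUT)%/en.tok $(OUT)%/en.tok.db ${PYTHON}/correct_tokenization.py
	${PYTHON}/correct_tokenization.py $< > $@

# Set the stand-in file's timestamp to that of the latest relevant correction.
$(OUT)%/en.tok.db :
	touch -t $$(${PYTHON}/latest_correction.py $@) $@
```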

But how can I force make to apply that second rule every time? We need to know if there are new corrections in the database, after all. My first idea was to declare the target $(OUT)%/en.tok.db phony by making it a prerequisite of the special target .PHONY, but that doesn’t work since the % wildcard is apparently only interpreted in rules whose target contains it. Thanks to this post by James T. Kim, I found a solution: instead of declaring $(OUT)%/en.tok.db phony itself, just make it depend on an explicit phony dummy target:

```make
$(OUT)%/en.tok.db : dummy
	touch -t $$(${PYTHON}/latest_correction.py $@) $@

.PHONY : dummy
```
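Why does moving the timestamp of en.tok.db help? As a simplified model (real make also remakes prerequisites recursively and handles phony targets specially), a target is rebuilt when it is missing or when any prerequisite is newer than it. The Java sketch below, with hypothetical class and method names and the script prerequisite left out for brevity, shows that comparison: once touch -t moves en.tok.db past en.tok.corr, the correction rule is due again.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.FileTime;

public class StalenessCheck {

    // Simplified model of make's decision: rebuild the target if it does not
    // exist or if any prerequisite was modified more recently than the target.
    static boolean needsRebuild(Path target, Path... prerequisites) throws IOException {
        if (!Files.exists(target)) {
            return true;
        }
        FileTime targetTime = Files.getLastModifiedTime(target);
        for (Path prerequisite : prerequisites) {
            if (Files.getLastModifiedTime(prerequisite).compareTo(targetTime) > 0) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("out");
        Path tok = Files.createFile(dir.resolve("en.tok"));
        Path db = Files.createFile(dir.resolve("en.tok.db"));
        Path corr = Files.createFile(dir.resolve("en.tok.corr"));

        // en.tok.corr was built after en.tok and after the last known correction.
        Files.setLastModifiedTime(tok, FileTime.fromMillis(1_000_000L));
        Files.setLastModifiedTime(db, FileTime.fromMillis(1_500_000L));
        Files.setLastModifiedTime(corr, FileTime.fromMillis(2_000_000L));
        System.out.println(needsRebuild(corr, tok, db)); // false

        // A newer correction arrives; "touch -t" moves en.tok.db past en.tok.corr.
        Files.setLastModifiedTime(db, FileTime.fromMillis(3_000_000L));
        System.out.println(needsRebuild(corr, tok, db)); // true
    }
}
```

The phony dummy prerequisite only guarantees that the touch recipe runs on every invocation; whether the correction rule then runs is still decided by comparing timestamps.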

My Debian Initiation

Having switched from Ubuntu to Debian Squeeze and pondering ways to combine the security of a largely stable operating system with the additional functionality afforded by individual newer software packages, I recently wondered: Apt pinning seems complicated, why not just add testing sources to sources.list and use apt-get -t testing to get whatever newer packages I need? I can now answer this question for myself: because if you are under the impression that upgrade tools like apt-get and Synaptic are aware of the “current distribution” and will never upgrade beyond that unless explicitly told so, then that impression is wrong, even if apt-get’s occasional “keeping back” packages and the name of the command to override this (dist-upgrade) may suggest it. You will thus inadvertently upgrade your whole system to a non-stable branch. And when you finally notice it, you will then, more out of a desire for purity than out of actual concern for your system’s security, use Apt pinning to try and perform a downgrade. The downgrade will fail halfway through because the pre-remove script for something as obscure as openoffice.org-filter-binfilter has an obscure problem, leaving you with a crippled system and without even Internet access to try and get information on how to resolve the issue. By this point, reinstalling from scratch seems more fun than any other option. And so I did.

Another lesson learned: do use the first DVD to install Debian. It contains a whole lot of very useful things, such as network-manager-gnome or synaptic, that are not on the CD and that are a hassle to install one by one. And there’s also a new unanswered question: why did the i386 DVD install an amd64 kernel?

Unicode Man

Highbrow Java

Here are examples from my actual code of five lesser-known Java features, in increasing order of how much fun I had discovering they exist.

Anonymous classes

These are fairly well-known, so let’s go for a freaky example – an anonymous class declaration within the head of a (labeled!) for loop:

```java
sentence : for (List<Node> children :
        node.getOrderedChildrenBySpan(
                sentence.getOrderedTerminals(), new Test<Node>() {

                    @Override
                    public boolean test(Node object) {
                        return false;
                    }

                })) {
    for (Node child : children) {
        String tag = child.getLabel().getTag();
        if (tag.contains("-SBJ")) {
            break sentence;
        }
        tags.add(PTBUtil.pureTag(child));
    }
}
```
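Node, Test and PTBUtil come from my project and are not shown, so here is a self-contained sketch of the same two tricks: an anonymous class in the head of a labeled for loop, and a labeled break that leaves both loops at once. It uses java.util.function.Predicate in place of Test; the select helper and the tag data are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

public class AnonymousLoopDemo {

    // Hypothetical helper: returns the elements of 'items' that pass 'filter'.
    static <E> List<E> select(List<E> items, Predicate<E> filter) {
        List<E> result = new ArrayList<>();
        for (E item : items) {
            if (filter.test(item)) {
                result.add(item);
            }
        }
        return result;
    }

    // Collects tags from the non-empty groups until a tag containing "-SBJ" is seen.
    static List<String> tagsBeforeSubject(List<List<String>> groups) {
        List<String> tags = new ArrayList<>();
        outer : for (List<String> group : select(groups, new Predicate<List<String>>() {
            @Override
            public boolean test(List<String> g) {
                return !g.isEmpty(); // skip empty groups
            }
        })) {
            for (String tag : group) {
                if (tag.contains("-SBJ")) {
                    break outer; // leaves the inner and the outer loop
                }
                tags.add(tag);
            }
        }
        return tags;
    }

    public static void main(String[] args) {
        List<List<String>> groups = List.of(
                List.of("DT", "NN"),
                List.of(),
                List.of("JJ", "NP-SBJ", "VB"),
                List.of("NN"));
        System.out.println(tagsBeforeSubject(groups)); // [DT, NN, JJ]
    }
}
```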

Enums

Boring, I know. I’m mentioning them here for completeness because I found out about them rather late and was like, hey, cool, that’s much nicer and cleaner than working with explicit integer constants.

```java
public enum EditOperationType {

    DELETE, INSERT, SWAP, MATCH;

    @Override
    public String toString() {
        switch (this) {
            case DELETE:
                return "delete";
            case INSERT:
                return "insert";
            case SWAP:
                return "swap";
            default:
                return "match";
        }
    }
}
```
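Two more enum features are worth knowing: every constant already knows its own name(), and EnumMap is a compact map keyed by an enum. The sketch below is an alternative, not the code above: it derives the lowercase label from name() instead of a switch (so a newly added constant cannot silently fall into the default branch), and it counts some made-up operations in an EnumMap.

```java
import java.util.EnumMap;
import java.util.Locale;
import java.util.Map;

public class EnumDemo {

    // Same constants as above; toString() derives the label from name().
    enum EditOperationType {
        DELETE, INSERT, SWAP, MATCH;

        @Override
        public String toString() {
            return name().toLowerCase(Locale.ROOT);
        }
    }

    // Counts how often each operation occurs; EnumMap keeps keys in declaration order.
    static Map<EditOperationType, Integer> count(EditOperationType... ops) {
        Map<EditOperationType, Integer> counts = new EnumMap<>(EditOperationType.class);
        for (EditOperationType op : ops) {
            counts.merge(op, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<EditOperationType, Integer> counts = count(
                EditOperationType.MATCH, EditOperationType.DELETE, EditOperationType.MATCH);
        System.out.println(counts); // {delete=1, match=2}
        // valueOf() parses the constant name, not the toString() label.
        System.out.println(EditOperationType.valueOf("SWAP")); // swap
    }
}
```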

Generic methods

Luckily, the following code is no longer live.

```java
@SuppressWarnings("unchecked")
public <T> T retrieve(Class<T> type, int id) {
    return (T) getStoreForType(type).retrieve(id);
}
```
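The @SuppressWarnings above is needed because of the unchecked cast. When the caller passes a Class&lt;T&gt; token anyway, Class.cast() can do the check at run time instead. A minimal sketch, assuming a hypothetical TypedStore that stands in for getStoreForType(), which is not shown here:

```java
import java.util.HashMap;
import java.util.Map;

public class TypedStore {

    // One id-to-object map per type (hypothetical stand-in for getStoreForType).
    private final Map<Class<?>, Map<Integer, Object>> stores = new HashMap<>();

    public <T> void store(Class<T> type, int id, T object) {
        stores.computeIfAbsent(type, k -> new HashMap<>()).put(id, object);
    }

    // <T> is declared on the method itself; Class.cast() checks the type at
    // run time, so no unchecked cast and no @SuppressWarnings are needed.
    public <T> T retrieve(Class<T> type, int id) {
        Map<Integer, Object> store = stores.getOrDefault(type, Map.of());
        return type.cast(store.get(id)); // cast(null) simply returns null
    }

    public static void main(String[] args) {
        TypedStore db = new TypedStore();
        db.store(String.class, 1, "hello");
        db.store(Integer.class, 1, 42);
        String s = db.retrieve(String.class, 1); // T inferred as String
        int n = db.retrieve(Integer.class, 1);   // T inferred as Integer
        System.out.println(s + " " + n); // hello 42
    }
}
```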

Instance initializers

I discovered these once when I thought I needed a constructor in an anonymous class and wondered how to write one: how would you declare a constructor in a class without a name? That code did not remain in my workspace, however, and I never used an instance initializer again. With anonymous classes, I tend to use final variables from the enclosing scope, or derive the anonymous class from a class whose constructor already handles everything I need. So I’m pulling an example from someone else’s code:

```java
_result = new ContainerBlock() {
    {
        setPanel(_panel);
        setLayout(LayoutFactory.getInstance()
                .getReentrancyLayout());
        addChild(unboundVarLabel);
    }
};
```
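A self-contained sketch of the ordering involved, with a hypothetical Panel class: the superclass constructor runs first, then the anonymous subclass’s instance initializer, which is why the initializer block can rely on everything the constructor has set up.

```java
import java.util.ArrayList;
import java.util.List;

public class InitializerDemo {

    // Hypothetical superclass whose constructor records that it ran.
    static class Panel {
        final List<String> log = new ArrayList<>();
        String title = "untitled";

        Panel() {
            log.add("constructor");
        }
    }

    // An anonymous subclass cannot declare a constructor, but it can have an
    // instance initializer block, which runs after Panel() has finished.
    static Panel settingsPanel() {
        return new Panel() {
            {
                title = "settings";
                log.add("initializer");
            }
        };
    }

    public static void main(String[] args) {
        Panel panel = settingsPanel();
        System.out.println(panel.title + " " + panel.log); // settings [constructor, initializer]
    }
}
```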

Multiple type bounds

```java
public abstract class IncrementalComparator<
        T extends HasSize & HasID> {
    // ...
}
```
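HasSize and HasID are not shown above, so the interfaces below are hypothetical stand-ins with assumed method names. The sketch uses the same & syntax on a method type parameter: because T is known to implement both interfaces, the method can call getSize() and getID() on it without casting.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class BoundsDemo {

    // Hypothetical interfaces; their real definitions are not shown in the post.
    interface HasSize { int getSize(); }
    interface HasID { int getID(); }

    static class Chunk implements HasSize, HasID {
        private final int id;
        private final int size;

        Chunk(int id, int size) {
            this.id = id;
            this.size = size;
        }

        public int getSize() { return size; }
        public int getID() { return id; }

        @Override
        public String toString() { return "#" + id; }
    }

    // T must implement both interfaces, so both methods are available on it.
    static <T extends HasSize & HasID> List<T> sortBySizeThenId(List<T> items) {
        List<T> sorted = new ArrayList<>(items);
        sorted.sort(Comparator.<T>comparingInt(x -> x.getSize())
                .thenComparingInt(x -> x.getID()));
        return sorted;
    }

    public static void main(String[] args) {
        List<Chunk> chunks = List.of(new Chunk(3, 10), new Chunk(1, 5), new Chunk(2, 10));
        System.out.println(sortBySizeThenId(chunks)); // [#1, #2, #3]
    }
}
```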

I wonder what’s next.

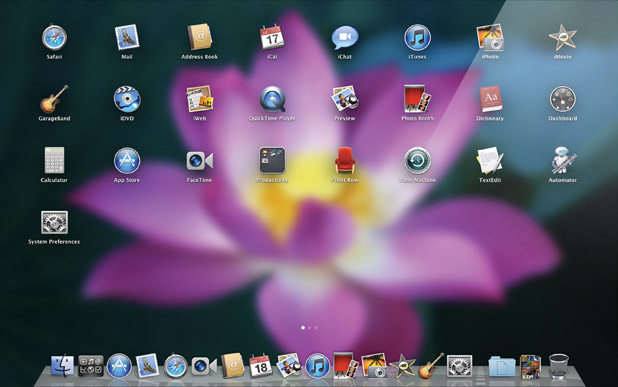

Developments in Desktop Environments, Part 2: The Glorious Future

Yesterday I looked at recent trends in the development of computer desktop environments and noted that the traditional desktop (+ windows + panels + menus) metaphor is being abandoned in favor of a simpler “one thing is on screen at a time” policy, as already used in the graphical user interfaces of mobile devices. Like the developers of Mac OS X and GNOME Shell, I too think that the traditional desktop metaphor must die, but I want something completely different to replace it. Here are some guidelines that, in my opinion, should be followed to create next-generation desktop environments:

“Navigational” elements like application launchers and overviews of active applications have no business being full-screen monsters by default, as is the case with Mission Control in Mac OS X or with the Activities view in GNOME Shell. There’s nothing wrong with traditional panels and menus. Make them as lean as possible while keeping them intuitive. I think Windows (with the task bar in Windows 7) and Ubuntu (with the launcher in Unity) are on the right track by adopting the design pioneered by Mac OS X’s dock: frequently used and currently open applications are in the same place. This may seem conceptually dubious at first, but it makes more and more sense as more and more applications persist their state.

No desktop! When the traditional desktop metaphor dies, make sure the desktop dies with it. Sadly, no major desktop environment seems to be tackling this. The desktop is sort of like a window, but can only be shown by moving all windows out of the way. It lacks a clearly defined purpose and tends to get cluttered one way or another. Get rid of it!

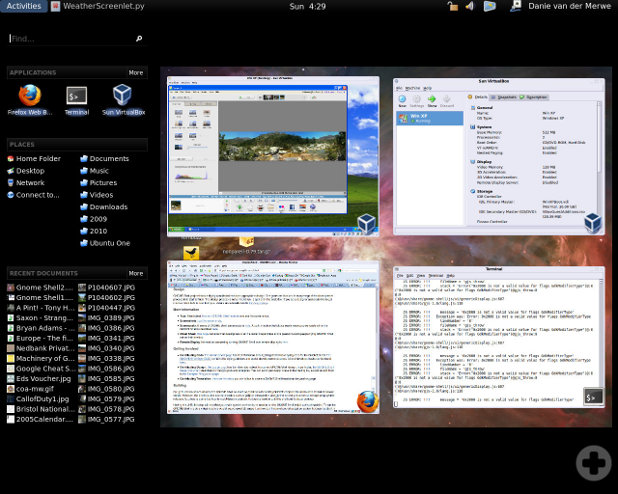

Go tiling! Now that there’s no desktop in front of which windows can float, windows should float no more. What overstrains users’ (read: my) minds is not having more than one window visible at a time. On the contrary, there are many tasks that require working with two applications simultaneously. What annoys users (read: me) is having to arrange windows themselves. Full-screen is a nice feature that moves and resizes one window so that it occupies the whole available screen space. I want that for two or more windows! They should always be arranged automatically to use the screen space optimally. The answer to this plea is tiling window managers; here’s one in action:

Current tiling window managers are for technical users who are willing to do quite a bit of configuration before anything works, a hell of a lot of configuration before everything works nicely, and to memorize a lot of keyboard commands. So far, all of this has put me off going tiling. There is no reason why it should stay that way. Complex GUIs like those of Photoshop or Eclipse already consist of multiple “subwindows” called views that can be freely rearranged, docked, undocked, grouped etc. using the mouse. The same principle could be applied to the whole desktop, for example in a Linux distribution that ships a decent set of default configuration settings and makes sure that special things like indicator applets and input methods work as we’re used to from the traditional GNOME desktop. Monbuntu, anyone?
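To make “arranged automatically” a little more concrete: many tiling window managers offer some variant of a master-stack layout. The sketch below is a simplified, hypothetical version, not taken from any particular window manager: the first window gets a share of the screen width, and the remaining windows split the other column evenly. Rectangles print in X11-style WIDTHxHEIGHT+X+Y notation.

```java
import java.util.ArrayList;
import java.util.List;

public class TilingSketch {

    // Screen rectangle in pixels.
    static final class Rect {
        final int x, y, width, height;

        Rect(int x, int y, int width, int height) {
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }

        @Override
        public String toString() {
            return width + "x" + height + "+" + x + "+" + y;
        }
    }

    // Master-stack layout: window 0 takes the left part of the screen,
    // the remaining windows are stacked vertically on the right.
    static List<Rect> masterStack(int n, int screenW, int screenH, double masterRatio) {
        List<Rect> rects = new ArrayList<>();
        if (n <= 0) {
            return rects;
        }
        if (n == 1) {
            rects.add(new Rect(0, 0, screenW, screenH)); // a single window behaves like full-screen
            return rects;
        }
        int masterW = (int) Math.round(screenW * masterRatio);
        rects.add(new Rect(0, 0, masterW, screenH));
        int stackCount = n - 1;
        int stackW = screenW - masterW;
        int y = 0;
        for (int i = 0; i < stackCount; i++) {
            // Divide the remaining height so the stack fills the screen exactly.
            int h = (screenH - y) / (stackCount - i);
            rects.add(new Rect(masterW, y, stackW, h));
            y += h;
        }
        return rects;
    }

    public static void main(String[] args) {
        System.out.println(masterStack(1, 1920, 1080, 0.5)); // [1920x1080+0+0]
        System.out.println(masterStack(3, 1920, 1080, 0.5)); // [960x1080+0+0, 960x540+960+0, 960x540+960+540]
    }
}
```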

Developments in Desktop Environments, Part 1: The Awkward Present

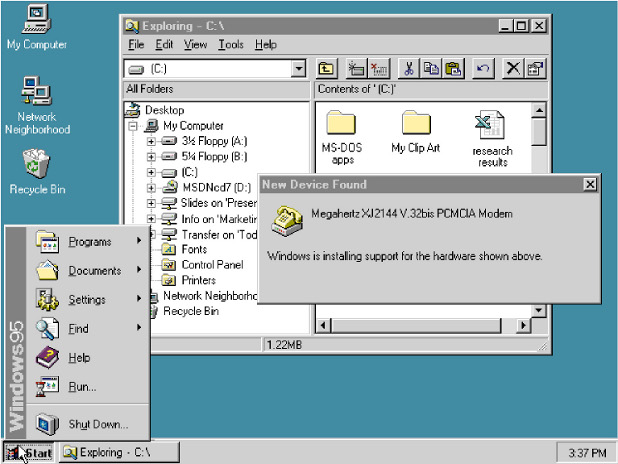

Once upon a time, when computer operating systems learned to multi-task, their basic user interfaces started to reflect this ability: applications now ran simultaneously in a number of windows that could be freely opened, closed, moved around and resized on the screen. This was (an important aspect of what is) called “the desktop metaphor”.

Always-visible gadgets like “task bars”, “docks” and “menu bars” were introduced for basic tasks like managing open windows and opening new ones.

It took the advent of super-user-friendly mobile devices (limited multitasking ability, limited screen space) for developers to notice that, unlike modern desktop computers, people aren’t actually very good at multitasking. At least for the tasks they do with mobile devices, people are perfectly happy with only having one window, or menu, open at a time.

This trend is now coming to the desktop computer. Apple recently announced a new release of Mac OS X, explicitly stating that many of the new features are inspired by the iPhone and the iPad. The most striking one is Launchpad. It is nothing more than a menu of all available applications, but one that takes up the whole screen. Together with Dashboard and Exposé (now called Mission Control), that’s quite a long list of special-purpose full-screen gadgets taking over window managing/application launching functions traditionally fulfilled by task bars etc. And together with Mac OS X’s new full-screen apps (not quite your traditional maximized windows), it quite clearly marks a turn toward a one-window-is-visible-at-a-time principle.

A similar thing is going on in GNOME Shell. They are cramming everything for which there used to be panels and menus into one full-screen view called Activities, including Exposé-like overviews of the desktop(s). If the multitude of full-screen gadgets in Mac OS X seems confusing, the GNOME approach of cramming so many things into one full-screen view seems bizarre. If upon clicking a button with the ultimate goal of, say, firing up the calculator, the contents of the whole screen change and hide my currently open windows, I consider this a high price to pay. In return, there should at least be a gain in focus, as with the Mac OS X gadgets, each of which shows more or less one kind of thing only.

So what is a desktop environment developer to do if she really wants to advance the state of the art instead of just haphazardly introducing new misfeatures (or taking it slow with moving away from the traditional desktop metaphor, as Microsoft does)? Is there a happy medium between overview and focus? Bear with me for Part 2: The Glorious Future.

text/html decoder

Stallman/Sproull

They’ve now made a film of the story of the hacker Mark Zuckerberg, yet Richard Stallman’s story is far more movie material. The (semi-legendary) key scene at the beginning would of course be the one in which Stallman, around 1980, casually drops by Professor Robert Sproull’s office at Carnegie Mellon University, asks for the source code of a particular Xerox printer driver, and is thunderstruck when, contrary to all custom, his request is refused: the driver is “proprietary”, and the developers have promised not to hand out the source code. In other words, the kind of software with which Bill Gates would go on to make a fortune, with which most of us grew up in the nineties and noughties, and which some of us, fed up, then largely left behind when we switched from Windows to Linux.

I would stage the scene like this: Stallman, naturally, in grubby jeans, a washed-out T-shirt and a full head of long hair; Sproull in a three-piece suit with neatly parted hair. As the conversation turns from a relaxed, collegial chat into the seed of a war of the worlds, dramatic cuts fly back and forth between the two men, underscored by timpani beats, and the camera moves ever closer to their faces. Cold detachment in Sproull’s face, into which a spark of smug schadenfreude then creeps, while the features of his hippie-like counterpart go from incomprehension through horror to naked rage.

In the final shot we see the man with the wild beard from a bird’s-eye view, raising his fists to the sky and letting out a primal scream: the moment in which Richard Stallman decides to devote his life, from that hour on, to the fight for Free Software.

LaTeX, PSTricks, pdfTeX and Kile

Many people writing scientific documents in TeX know the problem: on the one hand, you want to use the more modern pdfTeX rather than LaTeX, for example because of the hyperref package or because you want to include PDF, PNG or JPG images. On the other hand, pdfTeX fails to produce figures that make use of PostScript, as is the case when using the very useful PSTricks. There are a number of possible ways around this problem. What is working well for me right now is a solution kindly provided by Wolfgang. Create PSTricks figures as separate documents using the following skeleton:

```latex
\documentclass{article}
\usepackage{pstricks}
\pagestyle{empty}
\begin{document}
% your graphics here
\end{document}
```

Then autocrop the document and save it as a PDF file using the following shell script:

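```sh
#!/bin/sh
# $1 is the figure's base name without extension, e.g. "figure" for figure.tex
latex "$1.tex"           # .tex -> .dvi
dvips "$1.dvi"           # .dvi -> .ps
ps2eps -l --nohires -f "$1.ps"   # crop to the bounding box: .ps -> .eps
ps2pdf -dEPSCrop -dAutoRotatePages=/None -dUseFlateCompression=true "$1.eps"   # .eps -> .pdf, keeping the crop
```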

Then you can include the graphics (in PDF format) from your main document, which you build using pdfTeX.

Since I use Kile for writing in TeX, I replaced the shell script with a build tool which I called IlluTeX. It amounts to the following additions in ~/.kde/share/config/kilerc:

```ini
[Tool/IlluTeX/Default]
autoRun=no
checkForRoot=no
class=Sequence
close=no
jumpToFirstError=no
menu=Compile
sequence=LaTeX,DVItoPS,PStoEPS,PStoPDFEPSCrop,ViewPDF
state=Editor
to=pdf
type=Sequence

[Tool/PStoEPS/Default]
class=Convert
close=no
command=ps2eps
from=ps
menu=Compile
options=-l --nohires -f '%S.ps'
state=Editor
to=eps
type=Process

[Tool/PStoPDFEPSCrop/Default]
class=Convert
close=no
command=ps2pdf
from=eps
menu=Compile
options=-dEPSCrop -dAutoRotatePages=/None -dUseFlateCompression=true '%S.eps' '%S.pdf'
state=Editor
to=pdf
type=Process
```